Development Environment

Once you’ve read up on our Stack, you will want it running locally. This requires access to GCP. Once you have access, read the Terraform page to learn how to set up a service account and then create and download its JSON key file.

Visual Studio Code Setup

When you open the top project in VSCode, the recommended extensions and project settings will automatically configure your environment.

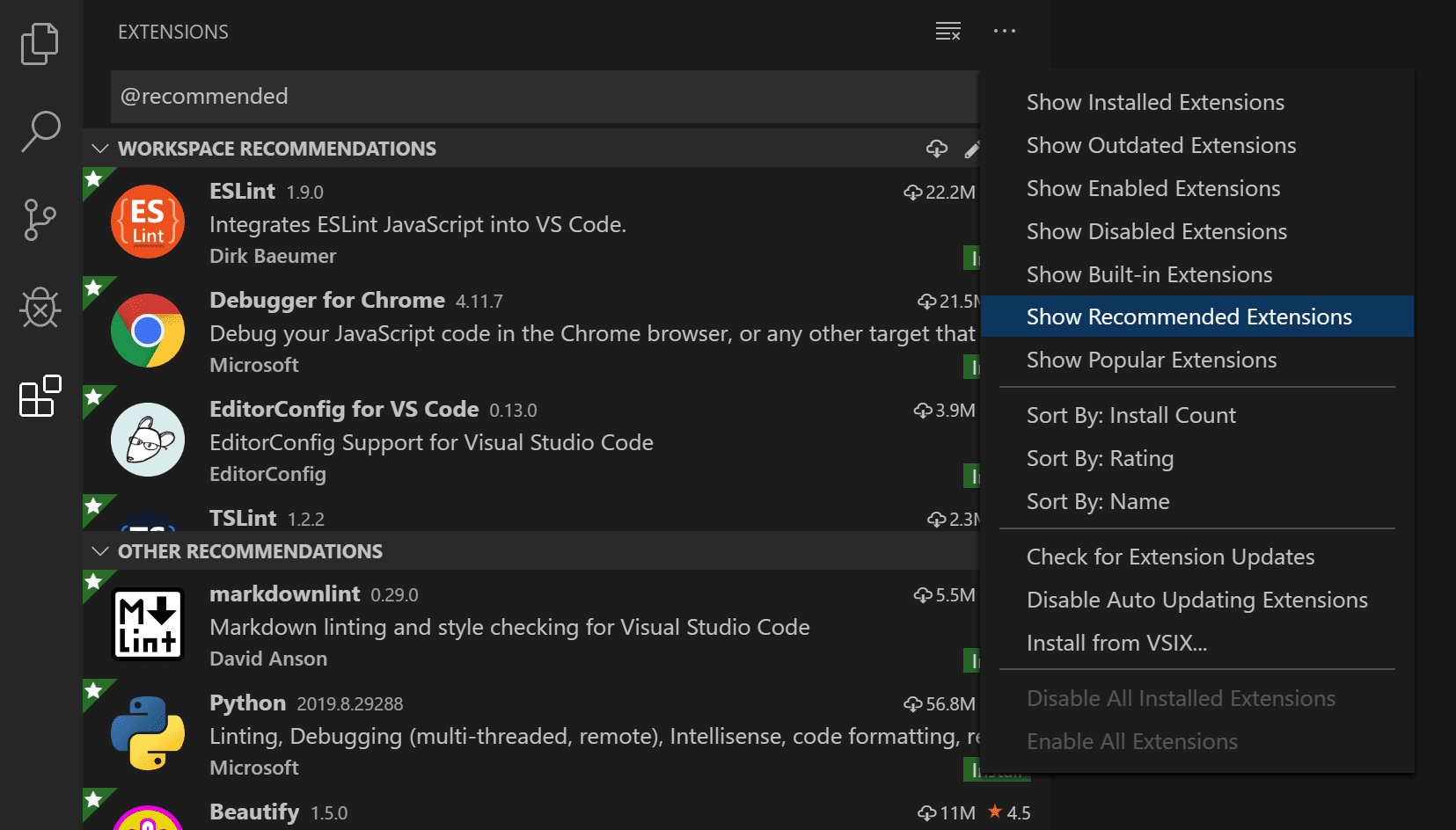

Extensions Setup

When you first open the Archipelo top project, VSCode shows a prompt to install the project’s workspace recommended extensions.

The top project’s extensions are defined via the file top/.vscode/extensions.json:

- Docker - ms-azuretools.vscode-docker - nice to have Docker support in VSCode

- Go - golang.Go - no Go support in VSCode without it

- Terraform - HashiCorp.terraform - Terraform file support

- Markdown All in One - yzhang.markdown-all-in-one - ensures our documentation Markdown validates

- Markdown Preview Github Styling - bierner.markdown-preview-github-styles - makes the Markdown preview look like GitHub's rendering, so what you see in the VSCode preview matches what you will see in the GitHub repo

- Python - ms-python.python - you can't do Python in VSCode without it

- Pylance - ms-python.vscode-pylance - Python in VSCode was awful until Pylance. Everyone uses it

- Jupyter - ms-toolsai.jupyter - you can't run Python code inline in VSCode without this plugin

- autoDocstring - Python Docstring Generator - njpwerner.autodocstring - this is the only way to get a team to actually write proper docstrings for Python code. There is literally no other way in the universe. I've used it to great effect

- GitHub Pull Requests and Issues - enables the GitHub tab on the left side of VSCode, which shows helpful information about your PR or branch in your IDE

- Google Cloud Code - GoogleCloudTools.cloudcode - Interact with GCP directly from your editor. (Optional)

- ESLint - dbaeumer.vscode-eslint - Static code analysis tool for identifying problematic patterns found in JavaScript code

Project Settings

The project settings file at top/.vscode/settings.json will automatically configure formatting for JavaScript and more advanced features for Python.

Global Configuration

- Editor rulers - 90 / 120

JavaScript

- Tab size - 2

Python

- Tab size - 4

- Format on save - True

- Organize imports with isort - True

- Language Server - Pylance

- Linting enabled - True

- Formatting provider - black

- Sort imports arguments - --profile black - make isort black compliant

- Pylint enabled - False - we use Flake8

- Flake8 enabled - True

- Flake8 arguments - --config=python/.flake8 - use our Flake8 configuration

- autoDocstring format - Google

GCP Credentials

Save your credentials file as $HOME/.config/archipelo/gcp-key-archipelo-dev.json

Manage GCP credentials using gcloud CLI
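Placing the key file at the path above can be scripted. The following is a minimal sketch, not part of the project tooling: the function names `gcp_key_path` and `install_gcp_key` and the download location in the usage comment are hypothetical. Restricting the file to owner-only permissions is a general precaution for key files rather than a documented project requirement, and whether any project tool reads `GOOGLE_APPLICATION_CREDENTIALS` (the standard variable used by Google client libraries) is an assumption.

```shell
# Print the credentials path named on this page.
gcp_key_path() {
  printf '%s\n' "$HOME/.config/archipelo/gcp-key-archipelo-dev.json"
}

# Copy a downloaded service-account key to that path (hypothetical helper).
install_gcp_key() {
  src=$1
  dest=$(gcp_key_path)
  if [ ! -f "$src" ]; then
    echo "key file not found: $src" >&2
    return 1
  fi
  mkdir -p "$(dirname "$dest")"
  cp "$src" "$dest"
  chmod 600 "$dest"   # readable and writable by the owner only
  printf '%s\n' "$dest"
}

# Usage (the source path is hypothetical):
#   install_gcp_key "$HOME/Downloads/my-service-account-key.json"
# Assumption: tools that rely on Google client libraries pick the key up via
#   export GOOGLE_APPLICATION_CREDENTIALS="$(gcp_key_path)"
```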

One Time Setup

(For a Docker-only setup, see below)

# Install Packages
brew install \
  go \
  node \
  pnpm \
  postgresql

# Setup PostgreSQL
brew services start postgresql
createdb archer
createdb identity
createdb analytics

# Install NPM Modules (run from the repository root)
cd js
pnpm i

# Start (go is a sibling of js, so step back up first)
cd ../go
go install ./...
~/go/bin/ardev

As you make changes, the Go web server and the TypeScript code recompile automatically and trigger a live reload.
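If one of the setup steps above fails, a quick check that the required tools are on your PATH can narrow down the cause. This is a minimal sketch under stated assumptions: the helper name `missing_tools` is hypothetical, and the tool list is taken from the commands used above (`psql` and `createdb` ship with the postgresql package).

```shell
# Print every tool from the argument list that is not found on PATH.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

# Check the tools used by the one-time setup.
missing=$(missing_tools go node pnpm psql createdb)
if [ -n "$missing" ]; then
  printf 'Not found on PATH:\n%s\n' "$missing"
else
  echo "All required tools found."
fi
```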

Setting up Database

Learn more about how to set up your local database on the following page: Database

Running unit-tests

Go unit-tests

To run all the unit tests of the Go codebase, run the following commands:

cd go
go test -v ./...

JS unit-tests

To run all the unit tests of the JS codebase, run the following commands:

cd jsapps
pnpm check -r

Docker Setup

If you prefer to develop without installing the project dependencies, a docker-compose setup also exists. To start it:

cd docker
docker-compose up -d

This runs PostgreSQL, OpenSearch, and ardev (pnpm and Go). You can debug inside the ardev container using the VSCode extensions for Go, Python, and Remote Containers.

Tests can also be run this way:

docker-compose exec -w /code/go ardev go test ./...
docker-compose exec -w /code/js ardev pnpm check

Exposing a local service to the internet with ngrok

Sometimes it is necessary to expose a service running on a local machine under a public DNS name, e.g. to test the whole data pipeline with Cloud Tasks queues running in GCP and batch jobs running on a local machine.

Ngrok is a tool for exposing local servers behind NATs and firewalls to the public internet over secure tunnels. To use it, download the package from the ngrok website, unzip it, connect it to your free account, and then run one more command to expose a locally running service. In return, you get a public DNS name that you can use for testing. The name changes every time you run ngrok.

$ ./ngrok http 9000 # local arspider port

ngrok by @inconshreveable (Ctrl+C to quit)

Session Status    online
Account           Darek Król (Plan: Free)
Version           2.3.40
Region            United States (us)
Web Interface     http://127.0.0.1:4040
Forwarding        http://9db4c55d95f2.ngrok.io -> http://localhost:9000
Forwarding        https://9db4c55d95f2.ngrok.io -> http://localhost:9000

Connections       ttl     opn     rt1     rt5     p50     p90
                  0       0       0.00    0.00    0.00    0.00

Now you can hit https://9db4c55d95f2.ngrok.io with curl from anywhere, including Cloud Tasks queues.

curl -v -X POST --data '{}' "https://9db4c55d95f2.ngrok.io/github/repo/list/"
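Because the public name changes on every run, it can be convenient to look it up instead of copying it by hand. The ngrok web interface (the http://127.0.0.1:4040 address in the output above) also serves a JSON list of active tunnels at /api/tunnels. The sketch below pulls the HTTPS address out of that JSON. The function name `ngrok_https_url` is hypothetical, and the extraction is plain text matching rather than real JSON parsing, so treat it as an illustration; a JSON tool such as jq would be sturdier if you have one installed.

```shell
# Read the /api/tunnels JSON on stdin and print the first https public URL.
# Prints nothing if no https tunnel is listed.
ngrok_https_url() {
  grep -oE '"public_url"[[:space:]]*:[[:space:]]*"https://[^"]+"' |
    head -n 1 |
    sed -E 's/.*"(https:[^"]+)"$/\1/'
}

# Usage, with ngrok already running locally:
#   url=$(curl -s http://127.0.0.1:4040/api/tunnels | ngrok_https_url)
#   curl -v -X POST --data '{}' "$url/github/repo/list/"
```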

Crawling limits

You can specify crawling limits for the NPM and GitHub pipelines via Terraform variables or environment variables.

When using Terraform, set the following variables in /tf/dev.auto.tfvars (0 means no limit):

// number of npm packages to crawl
arspider_npm_crawllimits_packages = "1000"

// max github repo id to crawl
arspider_github_crawllimits_maxrepoid = "3000"

// how many chunks (100 each) of github repo related items (e.g. issues, prs, ...) you want to crawl for each processed repo
arspider_github_crawllimits_page = "1"

// how many github repos will be processed/refreshed
arspider_github_crawllimits_repolimit = "1"

These variables correspond to env variables:

# number of npm packages to crawl
AR_SPIDER_NPM_CRAWLLIMITS_PACKAGES="1000"

# max github repo id to crawl
AR_SPIDER_GITHUB_CRAWLLIMITS_MAXREPOID="3000"

# how many chunks (100 each) of github repo related items (e.g. issues, prs, ...) you want to crawl for each processed repo
AR_SPIDER_GITHUB_CRAWLLIMITS_PAGE="1"

# how many github repos will be processed/refreshed
AR_SPIDER_GITHUB_CRAWLLIMITS_REPOLIMIT="1"
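The four pairs above suggest a simple naming rule: the `arspider_` prefix becomes `ar_spider_` and the whole name is upper-cased. The sketch below applies that rule to tfvars-style lines so the same limits can be reused as environment variables. The rule is inferred only from the pairs listed here, not from the project's code, and the helper names `tfvar_to_env` and `tfvars_to_exports` are hypothetical.

```shell
# Map one Terraform variable name to its environment variable name.
# Assumed rule: "arspider_" -> "ar_spider_", then upper-case everything.
tfvar_to_env() {
  printf '%s\n' "$1" | sed 's/^arspider_/ar_spider_/' | tr '[:lower:]' '[:upper:]'
}

# Read tfvars-style lines on stdin and print matching export lines.
# Lines that do not start with "arspider_" (comments, blanks) are skipped.
tfvars_to_exports() {
  while IFS= read -r line || [ -n "$line" ]; do
    case "$line" in
      arspider_*=*) ;;
      *) continue ;;
    esac
    name=$(printf '%s' "${line%%=*}" | tr -d '[:space:]')
    value=$(printf '%s' "${line#*=}" | sed 's/^[[:space:]]*//; s/[[:space:]]*$//')
    printf 'export %s=%s\n' "$(tfvar_to_env "$name")" "$value"
  done
}

# Usage, from the repository root:
#   tfvars_to_exports < tf/dev.auto.tfvars
```

Printing the export lines rather than evaluating them keeps the step reviewable before anything changes in your shell.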

You are now ready to use your development environment.